Neurons teach rapid learning

For the first time, a physical neural network has been shown to learn and remember ‘on the fly’.

The new system, inspired by and similar to the way the brain’s neurons work, could be used to develop efficient, low-energy machine intelligence for more complex, real-world learning and memory tasks.

“The findings demonstrate how brain-inspired learning and memory functions using nanowire networks can be harnessed to process dynamic, streaming data,” says Ruomin Zhu, a PhD student from the University of Sydney Nano Institute and School of Physics.

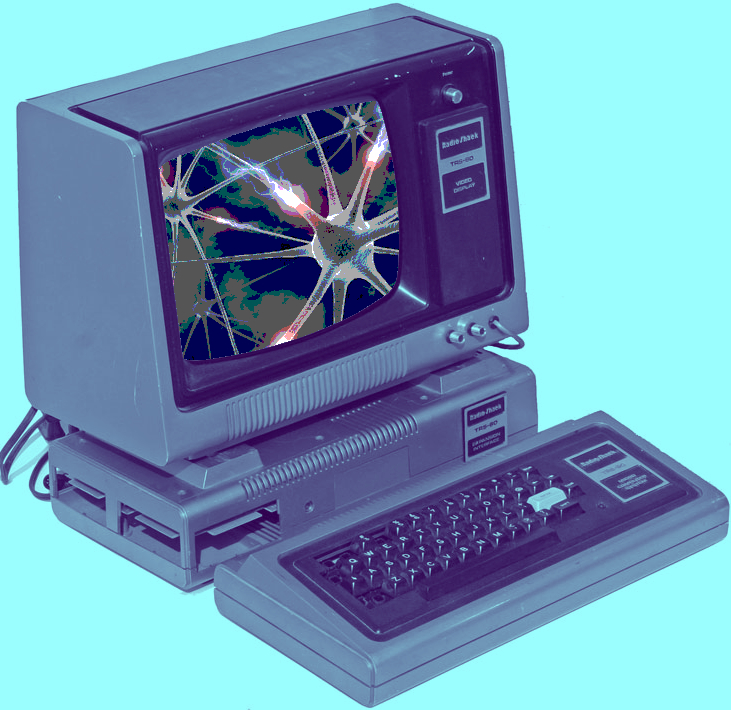

Nanowire networks are made up of tiny wires that are just billionths of a metre in diameter. The wires arrange themselves into patterns reminiscent of the children’s game ‘Pick-up Sticks’, mimicking neural networks, like those in the brain.

These networks can be used to perform specific information processing tasks.

Memory and learning tasks are achieved using simple algorithms that respond to changes in electronic resistance at junctions where the nanowires overlap.

Known as ‘resistive memory switching’, this function is created when electrical inputs encounter changes in conductivity, similar to what happens with synapses in the brain.

In this study, researchers used the network to recognise and remember sequences of electrical pulses corresponding to images.

Supervising researcher Professor Zdenka Kuncic said the memory task was similar to remembering a phone number.

The network was also used to perform a benchmark image recognition task, accessing images in the MNIST database of handwritten digits, a collection of 70,000 small greyscale images used in machine learning.

“Our previous research established the ability of nanowire networks to remember simple tasks. This work has extended these findings by showing tasks can be performed using dynamic data accessed online,” she said.

“This is a significant step forward as achieving an online learning capability is challenging when dealing with large amounts of data that can be continuously changing. A standard approach would be to store data in memory and then train a machine learning model using that stored information. But this would chew up too much energy for widespread application.

“Our novel approach allows the nanowire neural network to learn and remember ‘on the fly’, sample by sample, extracting data online, thus avoiding heavy memory and energy usage.”

Mr Zhu said there were other advantages when processing information online.

“If the data is being streamed continuously, such as it would be from a sensor for instance, machine learning that relied on artificial neural networks would need to have the ability to adapt in real-time, which they are currently not optimised for,” he said.

In the latest study, the nanowire neural network displayed a benchmark machine learning capability, scoring 93.4 percent in correctly identifying test images.

The memory task involved recalling sequences of up to eight digits.

For both tasks, data was streamed into the network to demonstrate its capacity for online learning and to show how memory enhances that learning.
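To make the contrast between online learning and the store-then-train approach concrete, here is a minimal software sketch. It is not the researchers' method, which runs on physical nanowire hardware. It is a simple linear model updated with the least-mean-squares rule, and the names `sample_stream` and `learn_online`, the synthetic data, and the learning rate are all assumptions made for illustration. The point it shows is that each sample is used once to adjust the model and is then discarded, so no dataset is ever held in memory.

```python
import random


def sample_stream(n_samples, seed=0):
    """Yield (features, target) pairs one at a time, like readings from a sensor.

    The data is synthetic (an assumption for this sketch), generated from a
    hidden linear rule that the learner has to discover.
    """
    rng = random.Random(seed)
    for _ in range(n_samples):
        x = [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)]
        y = 2.0 * x[0] - 1.0 * x[1]
        yield x, y


def learn_online(stream, n_features=2, learning_rate=0.1):
    """Update the weights after every sample; no sample is kept afterwards."""
    weights = [0.0] * n_features
    for x, y in stream:
        prediction = sum(w * xi for w, xi in zip(weights, x))
        error = y - prediction
        # Least-mean-squares step: nudge each weight in proportion to the error.
        weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
    return weights


weights = learn_online(sample_stream(5000))
print(weights)
```

Because `sample_stream` is a generator, only one sample exists in memory at any moment. After 5,000 samples the learned weights sit very close to the hidden values 2 and -1, even though the full data was never stored.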
