Deep-thinking study boosts memory maths

Experts have updated their assumptions of the brain’s memory capacity.


“This is a real bombshell in the field of neuroscience,” says Terry Sejnowski, co-senior author of a new paper published in eLife.

“Our new measurements of the brain's memory capacity increase conservative estimates by a factor of 10 to at least a petabyte, in the same ballpark as the World Wide Web,” he said.

“We discovered the key to unlocking the design principle for how hippocampal neurons function with low energy but high computation power.”

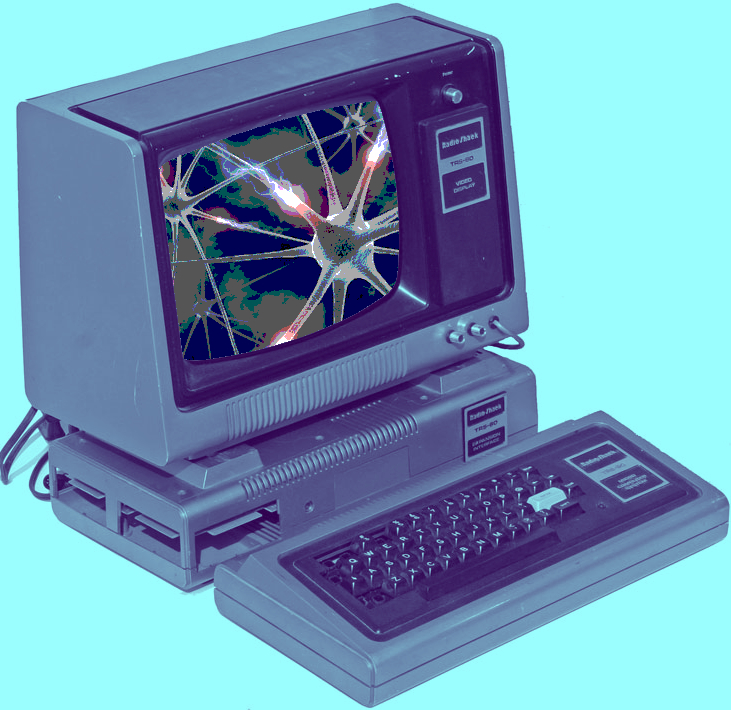

Our memories and thoughts are the result of patterns of electrical and chemical activity in the brain.

A key part of the activity happens when branches of neurons, which behave much like electrical wires, interact at junctions known as synapses.

An output 'wire' (an axon) from one neuron connects to an input 'wire' (a dendrite) of a second neuron. Signals travel across the synapse as chemicals called neurotransmitters to tell the receiving neuron whether to convey an electrical signal to other neurons. Each neuron can have thousands of these synapses with thousands of other neurons.

The research team reconstructed every dendrite, axon, glial process, and synapse from a volume of hippocampus the size of a single red blood cell.

When they did, they were bewildered by the complexity and diversity amongst the synapses.

In some cases, a single axon from one neuron formed two synapses with a single dendrite of a second neuron, suggesting that the first neuron was sending a duplicate message to the receiving neuron.

This duplication occurs in about 10 per cent of cases in the hippocampus, but the researchers did not think much of it at first.

But when they compared the sizes of the two synapses in each pair, they were surprised to discover that the two were nearly identical.

“We were amazed to find that the difference in the sizes of the pairs of synapses were very small, on average, only about eight per cent different in size. No one thought it would be such a small difference. This was a curveball from nature,” said researcher Tom Bartol.

Because the memory capacity of neurons is dependent upon synapse size, this eight per cent difference turned out to be a key number the team could then plug into their algorithmic models of the brain to measure how much information could potentially be stored in synaptic connections.

“Our data suggests there are 10 times more discrete sizes of synapses than previously thought,” says Bartol.

In computer terms, 26 distinguishable synapse sizes correspond to about 4.7 'bits' of information, because log₂ 26 ≈ 4.7.
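The conversion between sizes and bits can be checked with a few lines of Python. This is a minimal sketch that assumes capacity is simply log₂ N bits when a synapse can settle at one of N equally usable, distinguishable sizes; the helper names `bits_for_sizes` and `sizes_for_bits` are hypothetical. It also runs the conversion in reverse, showing that the older estimate of one to two bits corresponds to only two to four sizes.

```python
import math

def bits_for_sizes(n_sizes: int) -> float:
    """Bits needed to label one of n_sizes equally likely synapse sizes (log2 N)."""
    if n_sizes < 1:
        raise ValueError("need at least one size")
    return math.log2(n_sizes)

def sizes_for_bits(bits: float) -> float:
    """Inverse: number of distinguishable sizes implied by a bit count (2 ** bits)."""
    return 2.0 ** bits

new_estimate = bits_for_sizes(26)                         # 26 sizes, as reported
old_low, old_high = sizes_for_bits(1), sizes_for_bits(2)  # older 1 to 2 bit estimate

print(f"26 sizes -> {new_estimate:.2f} bits")
print(f"1-2 bits -> {old_low:.0f} to {old_high:.0f} sizes")
```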

Previously, it was thought that each synapse in the hippocampus could hold just one to two bits for short- and long-term memory storage.

“This is roughly an order of magnitude of precision more than anyone has ever imagined,” says Sejnowski.

The study also helped solve another mystery of the brain: how signalling between neurons can work at all when a signal from one neuron activates the second neuron only 10 to 20 per cent of the time.

One answer, it seems, lies in the constant adjustment of synapses, which averages out their success and failure rates over time.
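The averaging idea can be illustrated with a toy simulation. It assumes, purely for illustration, that each incoming signal independently gets through with a fixed probability (0.15 here, inside the 10 to 20 per cent range quoted above) and that what matters is the running fraction of successes. Each individual outcome is all-or-nothing, yet the average settles close to the underlying probability as signals accumulate. `simulate_transmission` is a hypothetical helper, not code from the study.

```python
import random

def simulate_transmission(p_release: float, n_events: int, seed: int = 0) -> list[float]:
    """Return the running success rate after each of n_events independent signals."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    successes = 0
    running_rate = []
    for i in range(1, n_events + 1):
        if rng.random() < p_release:  # this signal got through
            successes += 1
        running_rate.append(successes / i)
    return running_rate

rates = simulate_transmission(p_release=0.15, n_events=10_000)
for n in (10, 100, 1_000, 10_000):
    print(f"after {n:>6} signals: observed rate = {rates[n - 1]:.3f}")
```

Early readings can be far from 0.15, while later ones cluster tightly around it, which is the sense in which unreliable single events can still support a precise long-run signal.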

The team used their new data and a statistical model to work out how many signals it would take for a pair of synapses to reach that eight per cent difference.

They calculated that the smallest synapses need about 1,500 signalling events (roughly 20 minutes' worth) before their size and strength change, whereas the largest synapses need only a couple of hundred events (1 to 2 minutes' worth).
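A back-of-the-envelope version of this kind of calculation is sketched below, under simplifying assumptions that are ours rather than the paper's: each signal is an independent success/failure trial with release probability p, and a synapse "reaches" eight per cent precision once the coefficient of variation of its estimated success rate, sqrt((1 − p) / (n·p)), falls to 0.08. Solving for n gives n = (1 − p) / (p · 0.08²). The release probabilities used (0.1 for a small synapse, 0.5 for a large one) are illustrative guesses, not values from the study, and `events_for_precision` is a hypothetical name.

```python
import math

def events_for_precision(p_release: float, target_cv: float = 0.08) -> int:
    """Smallest n with sqrt((1 - p) / (n * p)) <= target_cv for a success-rate estimate."""
    if not 0.0 < p_release <= 1.0:
        raise ValueError("p_release must be in (0, 1]")
    n = (1.0 - p_release) / (p_release * target_cv ** 2)
    return math.ceil(n)

# Illustrative release probabilities (assumed, not taken from the study).
for label, p in [("small synapse", 0.1), ("large synapse", 0.5)]:
    print(f"{label} (p = {p}): about {events_for_precision(p)} signals")
```

With these guesses the sketch gives about 1,400 signals for the small synapse and under 200 for the large one. The direction matches the reported result: a synapse that transmits less often learns less from each signal, so it needs many more events to average out.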

“This means that every 2 or 20 minutes, your synapses are going up or down to the next size. The synapses are adjusting themselves according to the signals they receive,” says Bartol.

“The reality of the precision is truly remarkable and lays the foundation for whole new ways to think about brains and computers,” says researcher Kristen Harris.

“The work resulting from this collaboration has opened a new chapter in the search for learning and memory mechanisms.”

The findings also offer a valuable explanation for the brain's surprising efficiency. The waking adult brain generates only about 20 watts of continuous power, roughly as much as a very dim light bulb.

“This trick of the brain absolutely points to a way to design better computers,” says Sejnowski.

“Using probabilistic transmission turns out to be as accurate and require much less energy for both computers and brains.”
